At last! A tool for evaluating the usability of video games

Three years ago we began a project to create a tool for evaluating the usability of video games. We told you about it here. This project, carried out as part of a doctoral thesis, is now almost at an end. Let’s see what we came up with.

Reminder: a bit of background

There aren’t many ways to evaluate the User Experience (UX) of a game. In most cases we conduct User Tests. These are efficient and objective because the players we recruit are not involved in the development. But they can also be expensive, because these players need to be recruited and rewarded, not to mention the time needed to analyse the collected data.

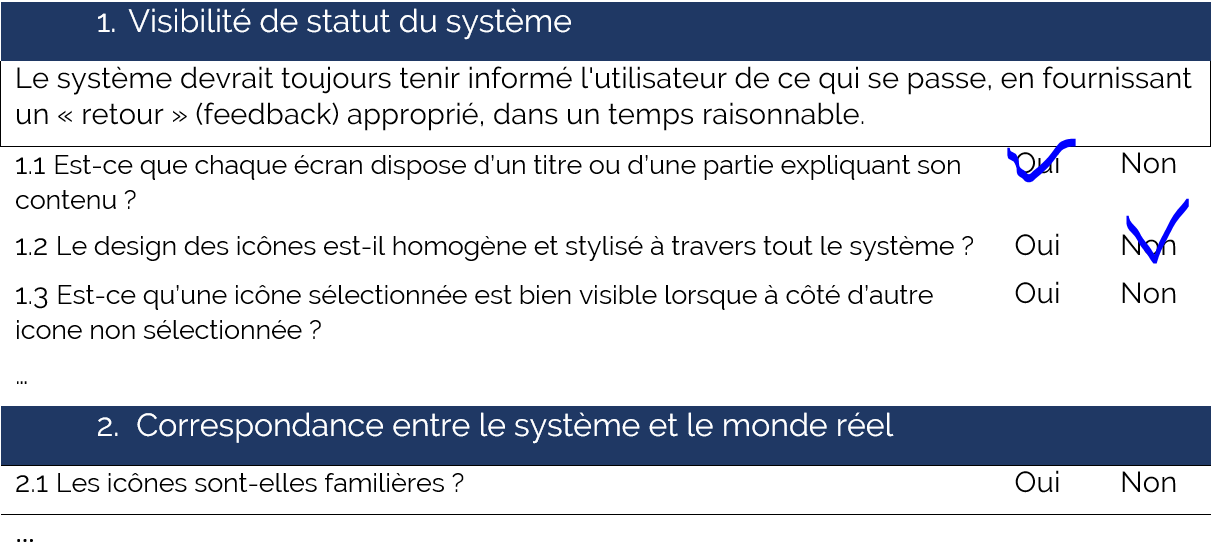

In the web and software user experience domain, we sometimes use a cheaper and faster alternative: heuristic evaluation. It involves using a set of recognized usability criteria (heuristics), and testers (UX professionals or developers) tick yes or no for each criterion, according to whether or not the interface respects it.

These heuristics need to be precise, easy to understand and carefully chosen so that even non-specialists can use them effectively.

They are usually grouped into core concepts. Such usability criteria are widely accepted as defining an optimal user-friendly experience, and they are used to standardize practice in the User Research field. The criteria we have specifically in mind are those published by J. M. Christian Bastien and Dominique L. Scapin, and the ten usability heuristics published by Jakob Nielsen.

Figure 1 – Extract from a Xerox criteria set, based on Nielsen’s heuristics plus additional recommendations

Okay. What next?

We translated, sorted and analysed more than 1300 recommendations across 28 sets of criteria, drawn from dozens of video game tests and almost a hundred articles on the subject. We also analysed around 80 video games of all kinds and had our evaluation tool tested by students, developers and usability experts. Now we are finally ready to show some concrete results: a set of 20 criteria grouped into three conceptual areas of analysis.

Explanation

The first area is Usability. Based on the definition given in the ISO 9241-11 standard, this area assesses the object as a Human-Machine Interface, that is, an interface that enables its users to carry out specific tasks effectively, efficiently and satisfactorily. We will not cloud the issue by discussing the contradictions between leisure and productivity, or between challenge and efficiency: the analysis here relates purely to interactivity and leaves aside the video gaming aspects.

The second area is Playability. According to Professor Sebastien Genvo, playability results from the nature and character of a game that enables and encourages a player to adopt a “playful mindset”, inciting him or her to test, to experiment and to investigate possibilities. This implies that the object of analysis responds to the user’s actions with a degree of contingency, and that the user is able to make sensible and meaningful choices. This line of analysis therefore treats the object of study as having play potential and game-like features, without necessarily being an actual game. It is entirely possible to evaluate the playability of a website or a software application insofar as it allows users to experiment with and discover its functionality and features.

The third area is Gameplay. This is another of those polysemous terms that are used indiscriminately; here we use it to mean “playing the game”. The term itself combines the two English words ‘game’ and ‘play’. ‘Game’ means a structure built on a collection of rules, and ‘play’ is the activity itself, implying freedom of movement without necessarily being constrained by a predetermined structure or set of rules. This concept treats the object as a video game, with rules and a mission to accomplish, but it looks at the way the player discovers the game’s functionality while playing it. It’s precisely by playing the game that the player acquires the necessary ‘playful skills’. The criteria draw on themes addressed by various researchers: the role of failure, studied by Jesper Juul; skill acquisition, studied in the work of Daniel Cook and in that of Arsenault and Perron; and the importance of the ‘model player’ and the ‘fictional world’, highlighted in the work of Professor Sebastien Genvo.

Figure 2 – A glance at the 20 criteria in the form of illustrated cards

As a visual aid for using the tool, the 20 criteria and their respective heuristics are presented as large-format, double-sided cards: one side gives the definition of the criterion and its heuristics, and the other shows an illustrated example based on real cases.

And now …

After these 3 years of research, our tool is now ready. With it, we can help developers throughout the whole video game production process, carrying out quick and efficient interventions at each step along the way. Our analysis lets us point out areas for improvement both in the details (e.g. parts of the interface that players fail to see) and at a broader level (e.g. a deep-rooted gameplay problem). It can also highlight the game’s strengths (e.g. good damage feedback, difficulty suited to the players). In a nutshell, our analysis works like an audit: it brings UX-centred expertise to bear on critical points.

We don’t intend to present the 20 criteria in detail here. However, we are going to publish a series of blog posts, each analysing a specific game and assessing its performance against one or two of our tool’s criteria. Our first post, coming next week, will look at the game Paladins: Champions of the Realm.